How does a Pacemaker/Corosync cluster operate? What is the relationship between Pacemaker and Corosync? This article gives an overview of their functionality and concepts. SUSE Linux Enterprise High Availability and Red Hat High Availability are both built mainly on Pacemaker and Corosync, and many other distributions, such as Ubuntu and Debian, also use Pacemaker with Corosync as their high availability solution.

What is Pacemaker?

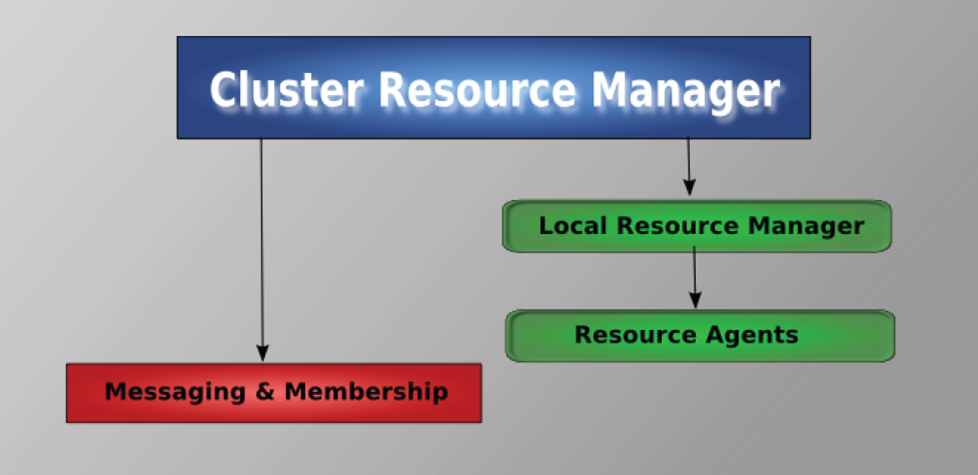

Pacemaker is a cluster resource manager. It achieves maximum availability for your cluster resources by detecting and recovering from node-level and resource-level failures. To do this, it uses the messaging and membership capabilities provided by your preferred cluster infrastructure (Corosync, or Heartbeat in older Pacemaker releases).

Use of Corosync

Corosync provides the cluster infrastructure functionality: messaging, membership, and quorum information. Pacemaker builds on these services to provide the high availability solution.
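Here is a small Python sketch of the majority rule that Corosync's votequorum service applies by default, to make the quorum idea concrete. It assumes one vote per node and ignores special options such as `two_node` and `wait_for_all`. The function names are my own and are not a Corosync API.

```python
def quorum_threshold(expected_votes: int) -> int:
    """Smallest number of votes that forms a strict majority."""
    return expected_votes // 2 + 1


def is_quorate(votes_present: int, expected_votes: int) -> bool:
    """True when this partition holds at least a majority of the expected votes."""
    return votes_present >= quorum_threshold(expected_votes)


if __name__ == "__main__":
    for nodes in (2, 3, 5):
        print(nodes, "nodes -> quorum needs", quorum_threshold(nodes), "votes")
```

A two-node cluster needs both votes for a majority, so losing one node loses quorum. That is why two-node clusters are usually configured with the `two_node: 1` option in the quorum section of corosync.conf.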

Pacemaker/Corosync Cluster Conceptual Overview

Pacemaker Components

- Non-cluster-aware components (illustrated in green).

These pieces include the resources themselves and the scripts that start, stop and monitor them.

- Cluster Resource Manager

Pacemaker provides the brain that processes and reacts to events regarding the cluster.

- Low-level infrastructure

Corosync provides reliable messaging, membership and quorum information about the cluster.

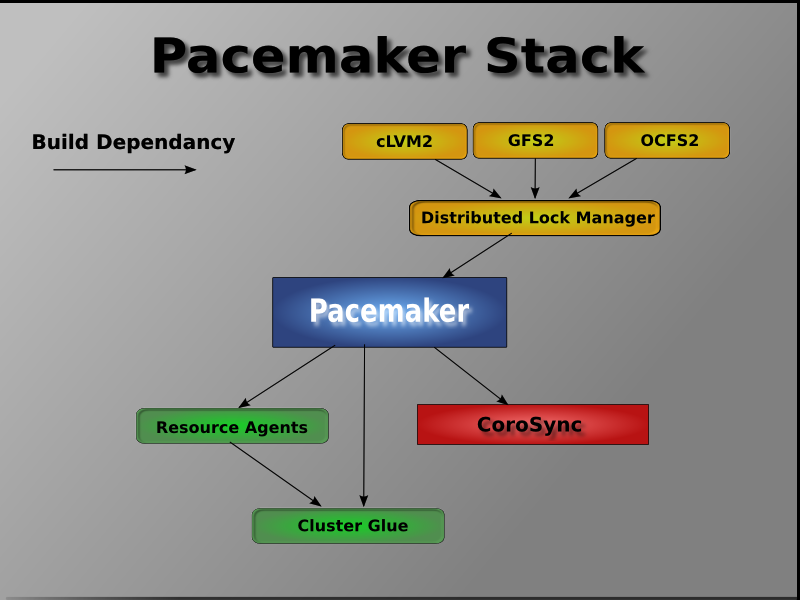

Pacemaker Stack

A Pacemaker/Corosync cluster is also called the Pacemaker stack. The image illustrates the relationship between the kernel DLM (Distributed Lock Manager) and the Pacemaker stack.

Almost all Linux kernels ship with DLM by default. DLM provides the locking used by cluster-aware filesystems such as GFS2 (Global File System 2) and OCFS2 (Oracle Cluster File System 2). To access a single filesystem from multiple hosts at the same time, you need either GFS2 or OCFS2. Any other filesystem on shared storage is created on top of a CLVM volume group and mounted on only one node at a time. CLVM stands for Clustered Logical Volume Manager.

Take a break if you feel overwhelmed, because there is a lot more still to come.

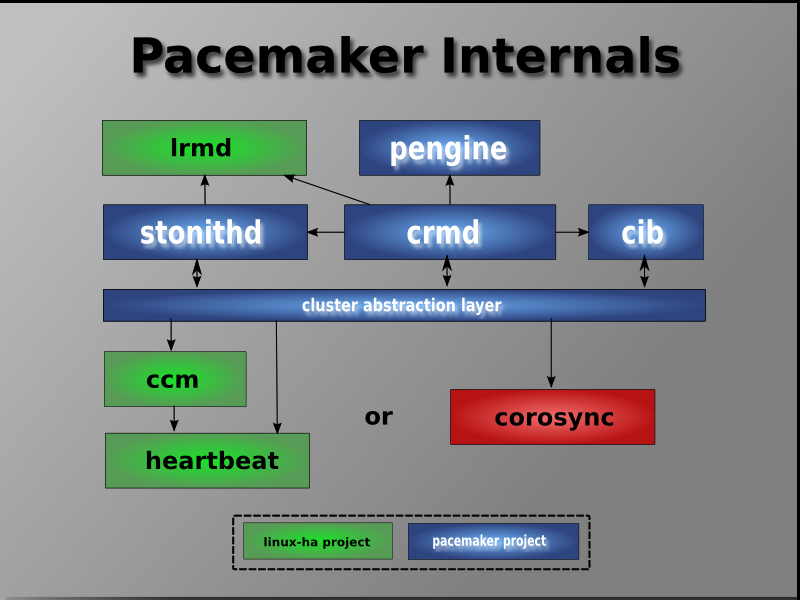

Pacemaker Internals

Before learning how to configure a Pacemaker/Corosync cluster, it is good to know its key components.

- CIB (Cluster Information Base)

- CRMd (Cluster Resource Management daemon)

- PEngine (PE or Policy Engine)

- STONITHd

The image shows how the components of Pacemaker are interlinked.

Cluster Information Base (CIB)

The Cluster Information Base is an in-memory XML representation of the entire cluster configuration and current status. It contains definitions of all cluster options, nodes, resources and constraints, and their relationships to each other. The CIB also synchronizes updates to all cluster nodes. There is one master CIB in the cluster, maintained by the Designated Coordinator (DC). All other nodes contain a CIB replica.
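Because the CIB is plain XML, its structure is easy to explore. The Python sketch below uses the standard library to parse a heavily trimmed, hypothetical CIB. It contains two nodes, an IP address resource, an Apache resource and one colocation constraint. A real CIB, as printed by `cibadmin --query`, has many more attributes and a populated status section.

```python
import xml.etree.ElementTree as ET

# Illustrative, heavily trimmed CIB: node names and resource ids are hypothetical.
CIB_XML = """
<cib admin_epoch="0" epoch="12" num_updates="3">
  <configuration>
    <crm_config/>
    <nodes>
      <node id="1" uname="node1"/>
      <node id="2" uname="node2"/>
    </nodes>
    <resources>
      <primitive id="web-ip" class="ocf" provider="heartbeat" type="IPaddr2"/>
      <primitive id="web-server" class="ocf" provider="heartbeat" type="apache"/>
    </resources>
    <constraints>
      <rsc_colocation id="web-with-ip" rsc="web-server" with-rsc="web-ip" score="INFINITY"/>
    </constraints>
  </configuration>
  <status/>
</cib>
"""


def summarize(cib_xml: str) -> dict:
    """Return node names, resource ids and constraint ids from a CIB document."""
    root = ET.fromstring(cib_xml.strip())
    config = root.find("configuration")
    return {
        "nodes": [n.get("uname") for n in config.iter("node")],
        "resources": [r.get("id") for r in config.find("resources")],
        "constraints": [c.get("id") for c in config.find("constraints")],
    }


if __name__ == "__main__":
    print(summarize(CIB_XML))
```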

Designated Coordinator (DC)

One CRM in the cluster is elected as the Designated Coordinator (DC). The DC is the only entity in the cluster that can decide that a cluster-wide change needs to be performed, such as fencing a node or moving resources around. The DC is also the node where the master copy of the CIB is kept. All other nodes get their configuration and resource allocation information from the current DC. The DC is elected from all nodes in the cluster after a membership change.
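The key property of the election is that every node reaches the same answer from the same membership. Pacemaker's real election compares several criteria between nodes. The Python sketch below replaces them with a hypothetical rule (the lowest node name wins), purely to illustrate re-election after a membership change.

```python
from __future__ import annotations


def elect_dc(members: set[str]) -> str:
    """Hypothetical rule: the lexicographically smallest node name becomes DC."""
    if not members:
        raise ValueError("cannot elect a DC from an empty membership")
    return min(members)


if __name__ == "__main__":
    members = {"node1", "node2"}
    print("DC:", elect_dc(members))      # node1
    members.discard("node1")             # node1 leaves the membership
    print("new DC:", elect_dc(members))  # node2
```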

Policy Engine (PE)

Whenever the Designated Coordinator needs to make a cluster-wide change (react to a new CIB), the Policy Engine calculates the next state of the cluster based on the current state and configuration. The PE also produces a transition graph containing a list of (resource) actions and dependencies to achieve the next cluster state. The PE always runs on the DC.
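A transition graph is essentially a set of actions plus ordering dependencies, so executing it means running the actions in dependency order. The Python sketch below applies `graphlib.TopologicalSorter` (Python 3.9+) to a hypothetical graph that moves an Apache service and its IP address from node1 to node2. The action names are invented; Pacemaker's real transition graph is an XML document with much more detail.

```python
from __future__ import annotations

from graphlib import TopologicalSorter

# Hypothetical transition graph: each action maps to the actions that must
# finish before it may run.
transition = {
    "stop web-server on node1": set(),
    "stop web-ip on node1": {"stop web-server on node1"},
    "start web-ip on node2": {"stop web-ip on node1"},
    "start web-server on node2": {"start web-ip on node2"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return one valid order in which the actions can be executed."""
    return list(TopologicalSorter(graph).static_order())


if __name__ == "__main__":
    for step, action in enumerate(execution_order(transition), start=1):
        print(step, action)
```

Actions with no mutual dependency could run in parallel. `TopologicalSorter.get_ready()` exposes those batches if you want to model that.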

Cluster Resource Manager (CRM)

Every action taken in the resource allocation layer passes through the Cluster Resource Manager. If other components of the resource allocation layer (or components which are in a higher layer) need to communicate, they do so through the local CRM. On every node, the CRM maintains the Cluster Information Base (CIB).

Local Resource Manager (LRM)

The LRM calls the local Resource Agents on behalf of the CRM. It can thus perform start / stop / monitor operations and report the result to the CRM. It also hides the difference between the supported script standards for Resource Agents (OCF, LSB). The LRM is the authoritative source for all resource related information on its local node.
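You can picture the translation the LRM performs as a lookup from each standard's exit codes to one common status. The Python sketch below is deliberately simplified. It maps only the well-known monitor/status codes (OCF 0 and 7, LSB 0 and 3) and treats everything else as a failure, whereas Pacemaker's own mapping distinguishes more cases.

```python
from enum import Enum


class Status(Enum):
    RUNNING = "running"
    NOT_RUNNING = "not running"
    FAILED = "failed"


# OCF monitor exit codes: 0 = OCF_SUCCESS, 7 = OCF_NOT_RUNNING.
OCF_MONITOR = {0: Status.RUNNING, 7: Status.NOT_RUNNING}
# LSB init-script "status" exit codes: 0 = running, 3 = not running.
LSB_STATUS = {0: Status.RUNNING, 3: Status.NOT_RUNNING}


def normalize(standard: str, exit_code: int) -> Status:
    """Translate a standard-specific exit code into one common status."""
    table = {"ocf": OCF_MONITOR, "lsb": LSB_STATUS}[standard]
    # Simplification: every other code is treated as a failure.
    return table.get(exit_code, Status.FAILED)
```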

Resource Agents

Resource Agents are programs (usually shell scripts) written to start, stop, and monitor a certain kind of service (a resource). Resource Agents are called only by the LRM. Third parties can place their own agents in a defined location in the file system and thus provide out-of-the-box cluster integration for their own software.
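Real resource agents are usually shell scripts, but the contract is language-neutral: the agent is called with an action name and answers with an OCF exit code. Here is a hypothetical agent sketched in Python that "runs" a service represented by a state file. The `state_file` parameter is invented for this example. OCF passes resource parameters as `OCF_RESKEY_<name>` environment variables. A production agent must also implement actions such as `meta-data` and `validate-all`.

```python
import os
import sys
import tempfile
from pathlib import Path

OCF_SUCCESS = 0
OCF_ERR_UNIMPLEMENTED = 3
OCF_NOT_RUNNING = 7

# Hypothetical parameter "state_file": its presence means the service is running.
STATE = Path(os.environ.get(
    "OCF_RESKEY_state_file",
    str(Path(tempfile.gettempdir()) / "demo-resource.state"),
))


def monitor() -> int:
    return OCF_SUCCESS if STATE.exists() else OCF_NOT_RUNNING


def start() -> int:
    STATE.touch()  # starting an already running resource still succeeds
    return OCF_SUCCESS


def stop() -> int:
    STATE.unlink(missing_ok=True)  # stopping a stopped resource still succeeds
    return OCF_SUCCESS


ACTIONS = {"start": start, "stop": stop, "monitor": monitor}


def main(argv: list) -> int:
    """Dispatch the action named in argv[1]; unknown actions are unimplemented."""
    handler = ACTIONS.get(argv[1] if len(argv) > 1 else "")
    return handler() if handler else OCF_ERR_UNIMPLEMENTED


if __name__ == "__main__":
    sys.exit(main(sys.argv))
```

Note that start and stop are idempotent. Starting a running resource or stopping a stopped one must still return success, because the cluster may repeat an action during recovery.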

Resource

Any service or software configured in the cluster is called a resource. For example, an NFS service, a filesystem, or an application can be a cluster resource.

Member Node

A server that is part of the cluster is called a member node.

What Is STONITH?

- STONITH is an acronym for “Shoot-The-Other-Node-In-The-Head”.

- It protects your data from being corrupted by rogue nodes or concurrent access.

- Just because a node is unresponsive, this doesn't mean it isn't accessing your data. The only way to be 100% sure that your data is safe is to use STONITH, so that we can be certain the node is truly offline before allowing the data to be accessed from another node.

- STONITH also has a role to play in the event that a clustered service cannot be stopped. In this case, the cluster uses STONITH to force the whole node offline, thereby making it safe to start the service elsewhere.
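The decision rule in the list above fits in a few lines. In this Python sketch, `Node` and `fence()` are hypothetical stand-ins for a node's state and a call to a real fence device. Resources move only when the old node stopped them cleanly or fencing has confirmed that node is offline.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    responsive: bool      # does the node still answer cluster messages?
    fenced: bool = False  # set once fencing has confirmed it is offline


def fence(node: Node, fence_device_works: bool) -> bool:
    """Hypothetical fencing call; a real cluster drives a fence device."""
    if fence_device_works:
        node.fenced = True
    return node.fenced


def safe_to_start_elsewhere(node: Node, stop_succeeded: bool,
                            fence_device_works: bool) -> bool:
    """May this node's resources be started on another node?"""
    if node.responsive and stop_succeeded:
        return True  # clean stop: the old node no longer uses the data
    # Unresponsive, or the service could not be stopped: fence first.
    return fence(node, fence_device_works)
```

If fencing fails, the sketch returns False and the resource stays down. This mirrors the principle above: an unavailable service is preferable to corrupted data.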

How Does a Two-Node Pacemaker/Corosync Cluster Operate?

Resources are monitored according to the configuration in the CIB. The CRMd daemon on the DC node makes the decisions and initiates the commands. To do so, it consults the Policy Engine and acts on its result.

For example, take a two-node cluster configured to provide an Apache web service. The cluster performs numerous operations, but the main actions are outlined below.

- A new membership is formed from the available nodes, and the members elect one node as the DC.

- The Policy Engine runs, and the master CIB is kept, only on the DC node. All other nodes hold a replica of the same CIB version.

- The DC checks the quorum status. If quorum is achieved, it proceeds to the next step.

- The CRMd on the DC node initiates the start command for the resources. The LRMd on each node receives and processes the start operation and reports the status back to the CRMd.

- The CRMd updates the CIB and validates the status. If the result is not as expected, it consults the Policy Engine and proceeds with the next operation, such as recovery or restart.

- The fourth and fifth steps repeat continuously.

- Commands and status updates travel over the underlying Corosync messaging layer, also called the infrastructure layer.

- When the DC node fails, another available node is automatically elected as the DC.
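Steps four and five form a loop: monitor, and on failure, restart or move. The Python sketch below simulates that loop for the two-node Apache example. It assumes a `migration-threshold` of 3. That is a real Pacemaker resource meta attribute, but the value here is chosen only for illustration. The sketch also simplifies fail counts by resetting them when the resource moves, whereas Pacemaker tracks them per node.

```python
from __future__ import annotations


def simulate(monitor_results: list[bool], nodes: list[str],
             migration_threshold: int = 3) -> list[str]:
    """Replay monitor results (True = healthy) and log the cluster's reaction."""
    active = 0      # index of the node currently running the resource
    fail_count = 0  # simplification: one counter, reset when the resource moves
    log = []
    for healthy in monitor_results:
        if healthy:
            log.append(f"{nodes[active]}: ok")
            continue
        fail_count += 1
        if fail_count < migration_threshold:
            log.append(f"{nodes[active]}: restart")  # recover in place
        else:
            active = (active + 1) % len(nodes)       # give up on this node
            fail_count = 0
            log.append(f"move to {nodes[active]}")
    return log


if __name__ == "__main__":
    print("\n".join(simulate([True, False, False, False, True], ["node1", "node2"])))
```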

Refer to the Pacemaker/Corosync cluster log, which gives you interesting information about these operations.

The SUSE high availability solution uses a Pacemaker/Corosync cluster. The basic hardware and software requirements to set up a cluster are listed below. I collected this information from the SUSE Linux Enterprise High Availability Extension 11 (SLE HAE 11) reference documentation. Do not forget to check the support matrix for your version.

SUSE HAE – Hardware Requirements

- 1 to 32 Linux servers. The servers do not require identical hardware (memory, disk space, etc.), but they must have the same architecture. Cross-platform clusters are not supported.

- At least two TCP/IP communication media. Cluster nodes use multicast or unicast for communication, so the network equipment must support the communication method you want to use. Preferably, the Ethernet channels should be bonded.

- Optional: a shared disk subsystem connected to all servers in the cluster that need to access it.

- A STONITH mechanism.

- Important: there is no support without STONITH.
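If you keep an inventory of your planned nodes, you can check the hardware rules above mechanically. The Python sketch below uses a hypothetical `ServerInfo` record and encodes only the SLE HAE 11 requirements listed here. Check the support matrix for your version before relying on the numbers.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class ServerInfo:
    hostname: str
    arch: str      # CPU architecture, e.g. "x86_64"
    networks: int  # number of independent TCP/IP communication media


def check_hardware(servers: list[ServerInfo], stonith_configured: bool) -> list[str]:
    """Return human-readable problems; an empty list means the rules pass."""
    problems = []
    if not 1 <= len(servers) <= 32:
        problems.append(f"{len(servers)} servers: SLE HAE 11 supports 1 to 32")
    if len({s.arch for s in servers}) > 1:
        problems.append("mixed architectures: cross-platform clusters are not supported")
    for s in servers:
        if s.networks < 2:
            problems.append(f"{s.hostname}: needs at least two communication media")
    if not stonith_configured:
        problems.append("no STONITH mechanism: no support without STONITH")
    return problems
```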

SUSE HAE – Software Requirements

- SUSE® Linux Enterprise Server 11 SP4 with all available online updates installed on all nodes that will be part of the cluster. The latest version at the time of writing is SLES 12 SP1.

- SUSE Linux Enterprise High Availability Extension 11 SP4, including all available online updates, installed on all nodes that will be part of the cluster. The latest version at the time of writing is SLE HAE 12 SP1.

SUSE HAE – Other Requirements

- Time Synchronization

- NIC names must be identical on all nodes.

- All cluster nodes must be able to access each other via SSH.

- Hostname and IP Address

- Use static IP addresses.

- List all cluster nodes in /etc/hosts with their fully qualified hostname and short hostname to avoid a dependency on DNS.
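It is easy to generate the /etc/hosts entries consistently for every node. This Python sketch builds them from a mapping of short hostnames to static IP addresses. The hostnames, the `example.com` domain and the 192.0.2.x documentation addresses are placeholders; replace them with your own.

```python
from __future__ import annotations

import ipaddress


def hosts_entries(nodes: dict[str, str], domain: str) -> str:
    """Build /etc/hosts lines: static IP, fully qualified name, short name."""
    lines = []
    for short_name, ip in sorted(nodes.items()):
        ipaddress.ip_address(ip)  # raises ValueError on a malformed address
        lines.append(f"{ip}\t{short_name}.{domain}\t{short_name}")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(hosts_entries({"node1": "192.0.2.11", "node2": "192.0.2.12"}, "example.com"), end="")
```

Append the same output to /etc/hosts on every node so that all members resolve each other identically.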

Did this whitepaper help you? If you have anything to say, please leave a comment below.

Useful Links

Home of Pacemaker and guide documents for configuration – http://clusterlabs.org/doc/

The Corosync project website – http://corosync.github.io/corosync/

SUSE Linux Enterprise High Availability Architecture – https://www.suse.com/documentation/sle_ha/book_sleha/data/sec_ha_architecture.html


-TMS